MRI analyzes vocal tract movements and converts silently mouthed speech into text, helping computer users such as those with language disabilities

Real-time vocal tract MR image and spoken text information

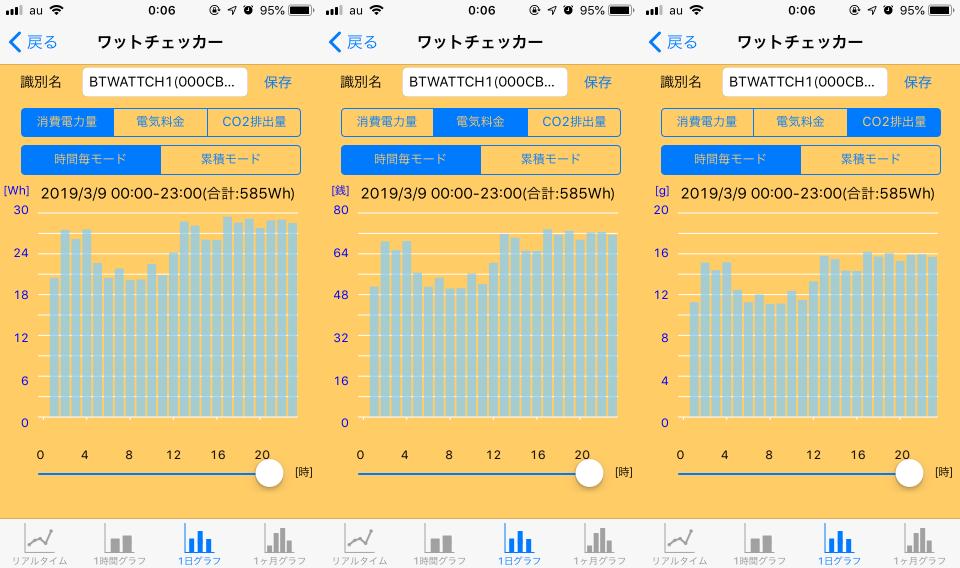

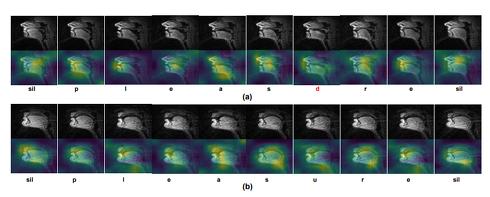

"Silent Speech and Emotion Recognition from Vocal Tract Shape Dynamics in Real-Time MRI", developed by a research team at the University of California, Merced, is a deep learning framework that captures the movement of the vocal tract with real-time magnetic resonance imaging (rtMRI), infers the acoustic information from that movement, and converts it to text.

(Top) MR image of the vocal tract. (Bottom) Display of vocal tract boundaries.

The vocal tract is the hollow passage through which the sound produced by the vocal cords travels before it leaves the body. In this research, the team observed the vocal tract using rtMRI and tested whether continuous speech can be recognized from the captured movement.

For continuous speech recognition, the team built an end-to-end deep learning recognition model (articulatory-to-acoustic mapping) that automatically estimates the acoustic information corresponding to a specific vocal tract configuration.

To understand whether and how emotion influences articulatory movements during speech production, the team also analyzed how the articulatory shape of each subregion of the vocal tract (the pharyngeal, palatal, dorsal, hard palate, and lip constriction regions) changes with emotion and gender.

The recognition model consists of two submodules: a feature extraction front-end that receives a sequence of video frames captured by rtMRI and outputs a feature vector for each frame, and a sequence modeling module that takes the sequence of per-frame feature vectors as input and predicts the sentence character by character.

The dataset comprises more than 4,000 videos of 10 speakers. It includes MR images of vocal tract shaping, synchronized audio recordings, and time-aligned word-level transcriptions.
To measure the performance of the proposed model, the team calculated the phoneme error rate (PER), character error rate (CER), and word error rate (WER), which are standard metrics for automatic speech recognition (ASR) models. The results showed that a sequence of vocal tract shapes could be automatically mapped to an entire sentence with an average PER of 40.6%.

In the analysis of emotions (neutrality, happiness, anger, and sadness), the subregions along the lower boundary of the vocal tract tended to change more markedly than those along the upper boundary for every emotion other than neutrality. Changes in each subregion were also affected by gender, with female speakers exhibiting greater distortion in the pharyngeal region and the palatal/dorsal region during negative emotion than during positive emotion.

These results suggest that the recognition model could serve as an input method for the various computers used in daily life, and that it might help people with speech or language disabilities and visual impairments interact with computers.

Source and Image Credits: Laxmi Pandey and Ahmed Sabbir Arif, "Silent Speech and Emotion Recognition from Vocal Tract Shape Dynamics in Real-Time MRI", SIGGRAPH '21: ACM SIGGRAPH 2021 Posters, August 2021, Article No.: 27, Pages 1-2, https://doi.org/10.1145/3450618.3469176

* Written by Hiroki Yamashita, who presides over the web media "Seamless", which introduces the latest research in technology. Mr. Yamashita picks out highly novel scientific papers and explains them.
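For readers unfamiliar with the error rates reported in the article, the following is a minimal sketch of how PER, CER, and WER are commonly computed: the edit distance (substitutions, deletions, and insertions) between the recognized sequence and the reference, divided by the reference length. It is an illustration only, not the authors' evaluation code. The function names and example sentences are assumptions made up for this sketch.

```python
def edit_distance(ref, hyp):
    """Levenshtein distance between two sequences of tokens."""
    # prev[j] holds the distance between the processed prefix of ref and hyp[:j].
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution or match
        prev = curr
    return prev[-1]


def error_rate(ref_tokens, hyp_tokens):
    """Edit distance normalized by the reference length."""
    if not ref_tokens:
        raise ValueError("reference must not be empty")
    return edit_distance(ref_tokens, hyp_tokens) / len(ref_tokens)


def wer(reference, hypothesis):
    """Word error rate: tokens are whitespace-separated words."""
    return error_rate(reference.split(), hypothesis.split())


def cer(reference, hypothesis):
    """Character error rate: tokens are individual characters."""
    return error_rate(list(reference), list(hypothesis))


if __name__ == "__main__":
    # Hypothetical reference and recognizer output, for illustration only.
    ref = "the cat sat on the mat"
    hyp = "the cat sat on a mat"
    print(f"WER = {wer(ref, hyp):.3f}")
    print(f"CER = {cer(ref, hyp):.3f}")
    # PER uses the same formula on phoneme sequences (hypothetical phonemes here).
    print(f"PER = {error_rate(['K', 'AE', 'T'], ['K', 'AH', 'T']):.3f}")
```

Under this definition, a PER of 40.6% means that on average about four edits per ten reference phonemes are needed to turn the predicted phoneme sequence into the correct one.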

ITmedia NEWS

